On the first day of the EuroTox 2013 conference in Interlaken, Switzerland, the workshop “Alternative test methods: challenges and regulatory application” featured contributions from the “holy trinity” of regulators, industry and academia. A lively debate ensued.

In 2004, when it was decided to introduce the ban, it was envisaged that the science would have progressed well beyond where it is now. While a number of alternative methods do exist, none are standardised, and no single approach can be considered the “best” option in general. Rather, the best approach depends on the particular application and the data available. Standardising regulatory requirements is therefore, understandably, a tricky process.

Nor is the need for alternative approaches confined to cosmetics. Pesticides, pharmaceuticals, REACH, biocides, product safety and general research are all affected by Directive 2010/63/EU “on the protection of animals used for scientific purposes”.

Researchers in all of these areas need alternative methods to save time and cost when assessing chemicals as candidates for the many applications they serve in the world around us.

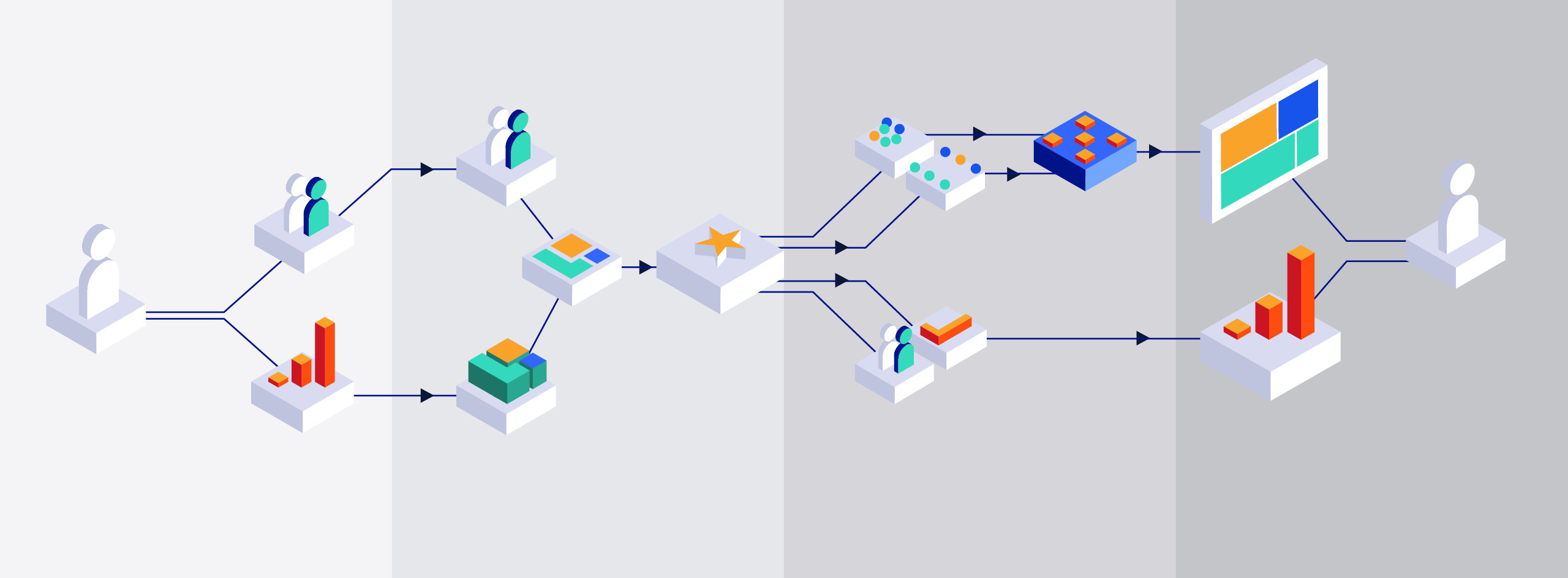

A number of alternative approaches currently exist, each of which can be applied with varying levels of success depending on what is already known about the chemical.

Computational approaches (or in silico toxicology) such as Quantitative Structure-Activity Relationships (QSAR) attempt to predict the likely toxicity of a chemical from its structure, using a model trained on a set of compounds whose biological effects are already known.
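To make the QSAR idea concrete, here is a minimal sketch, not a method presented at the workshop. It assumes a single hypothetical descriptor (`log_p`, an octanol-water partition coefficient) and fits a straight line by ordinary least squares; real QSAR models typically use many descriptors and more sophisticated learners. The names `Compound`, `fit_qsar` and `predict` are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Compound:
    name: str
    log_p: float     # hypothetical structural descriptor
    activity: float  # hypothetical known biological effect, e.g. -log(LC50)


def fit_qsar(training: list[Compound]) -> tuple[float, float]:
    """Fit activity = slope * log_p + intercept by ordinary least squares."""
    n = len(training)
    if n < 2:
        raise ValueError("need at least two training compounds")
    mean_x = sum(c.log_p for c in training) / n
    mean_y = sum(c.activity for c in training) / n
    sxx = sum((c.log_p - mean_x) ** 2 for c in training)
    if sxx == 0:
        raise ValueError("descriptor values must not all be identical")
    sxy = sum((c.log_p - mean_x) * (c.activity - mean_y) for c in training)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], log_p: float) -> float:
    """Predict the activity of an untested compound from its descriptor."""
    slope, intercept = model
    return slope * log_p + intercept


# Synthetic (not measured) training set generated from activity = 0.8 * log_p + 1.2
training = [
    Compound(f"toy-{i}", x, 0.8 * x + 1.2)
    for i, x in enumerate([0.5, 1.0, 2.0, 3.5, 4.0])
]
model = fit_qsar(training)
print(predict(model, 2.5))
```

Because the toy data lie exactly on a line, the fit recovers a slope of about 0.8 and an intercept of about 1.2; with real data the quality of the prediction depends on how well the training compounds cover the chemistry of the compound being predicted.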

Toxicokinetics uses quantitative models to determine the fate of a chemical once it enters the body, usually focusing on its Absorption, Distribution, Metabolism and Excretion (ADME), i.e. what the body does to the chemical.
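As a rough illustration of a toxicokinetic model, the sketch below uses the textbook one-compartment model with first-order absorption and elimination. This is an assumed simplification chosen for brevity: absorption is captured by the rate constant `ka` and bioavailable fraction `f_abs`, distribution by a single volume `volume`, and metabolism plus excretion are lumped into one elimination rate constant `ke`. Physiologically based models used in practice have many compartments. All parameter values are hypothetical.

```python
import math


def concentration(t: float, dose: float, f_abs: float, volume: float,
                  ka: float, ke: float) -> float:
    """Concentration at time t after a single dose, for a one-compartment
    model with first-order absorption (ka) and first-order elimination (ke)."""
    if t < 0:
        return 0.0
    if math.isclose(ka, ke):
        raise ValueError("this closed form requires ka != ke")
    scale = f_abs * dose * ka / (volume * (ka - ke))
    return scale * (math.exp(-ke * t) - math.exp(-ka * t))


def time_of_peak(ka: float, ke: float) -> float:
    """Time of maximum concentration: ln(ka / ke) / (ka - ke)."""
    return math.log(ka / ke) / (ka - ke)


# Hypothetical parameters: 100 mg dose, 50% absorbed, 40 L volume,
# ka = 1.0 per hour, ke = 0.1 per hour
params = dict(dose=100.0, f_abs=0.5, volume=40.0, ka=1.0, ke=0.1)
t_max = time_of_peak(params["ka"], params["ke"])
print(t_max, concentration(t_max, **params))
```

The elimination half-life in this model is ln(2) / `ke`, so the hypothetical `ke` of 0.1 per hour corresponds to roughly 6.9 hours.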

Toxicodynamics, on the other hand, models what happens at the chemical's biological site of action within the body (i.e. what the chemical does to the body).
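One common way to describe what a chemical does at its site of action is a concentration-response curve. The sketch below assumes the Hill (sigmoid Emax) equation, E = Emax · C^n / (EC50^n + C^n), as a representative example; it is not presented here as the model discussed at the workshop, and the parameter values are hypothetical.

```python
def hill_response(conc: float, e_max: float, ec50: float, hill: float = 1.0) -> float:
    """Effect at the site of action for a given concentration, using the
    Hill (sigmoid Emax) equation. ec50 is the concentration giving half of
    e_max; hill controls how steep the curve is."""
    if conc < 0 or ec50 <= 0:
        raise ValueError("concentration must be >= 0 and ec50 must be > 0")
    if conc == 0:
        return 0.0
    c_n = conc ** hill
    return e_max * c_n / (ec50 ** hill + c_n)


# Hypothetical parameters: maximum effect 100 (%), EC50 = 2.0 (mg/L), Hill slope 1.5
for c in [0.5, 2.0, 8.0]:
    print(c, hill_response(c, e_max=100.0, ec50=2.0, hill=1.5))
```

At a concentration equal to `ec50` the response is half of `e_max`, and larger Hill coefficients make the transition from no effect to full effect sharper.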

Other approaches rely on in vitro data, obtained from isolated biological tissues or cells in the laboratory. In many cases these approaches are combined to glean as much knowledge as possible about a chemical.

To illustrate how central this issue is to toxicology, the conference held a debate on the motion: “In the near foreseeable future, much of toxicity testing can be replaced by computational approaches”. After an entertaining and stimulating discussion, the motion was defeated by a large majority, showing how far from this ideal experts in the field believe we still are.

Despite this, even the debater opposing the motion acknowledged the importance of computational approaches and the need to keep developing them. It is not a question of if, but when. What is beyond question, then, is that modelling or in silico approaches to toxicology are here to stay, and they will require significant data, computational power and expertise to drive them forward into the 21st century.